Low-Latency HLS vs. WebRTC: What to Know Before Choosing a Protocol (Update)

December 13, 2021

You’re dead set on low-latency streaming. But not all low-latency protocols are created equal. So, how do you decide which one is right for your use case? The last thing you want is to build a solution, send it to QA, and then discover that it’s full of glitches and won’t stop buffering. There’s also the financial factor: Some protocols cost more depending on how you use them, for example when you scale them to large audiences. This post will look at Low-Latency HTTP Live Streaming (HLS) and Web Real-Time Communication (WebRTC), the top two low-latency protocols, and what you need to know to choose the right one.

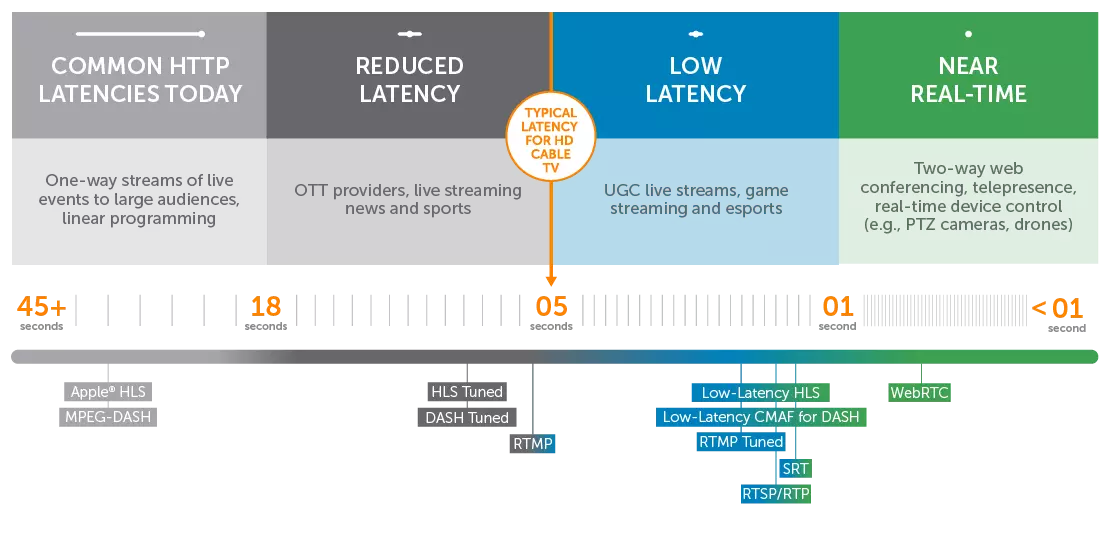

Latency

Is WebRTC vs. HLS truly a battle of speed? Before the Apple Worldwide Developers Conference (WWDC) in 2019, that would’ve been a hard no. The HLS protocol has a lot of great attributes that’ve led to its mass popularity. Low latency, however, was not traditionally one of them. In fact, Apple intentionally built latency into the technology to improve quality and scale. Historically, one could expect 10- to 45-second latency with HLS, which is far too high for interactive streaming.

This all changed when Apple’s Roger Pantos announced Low-Latency HLS (LL-HLS) at WWDC 2019, and again when he announced that LL-HLS and HLS were no longer two separate streaming protocols. Since then, the LL-HLS extension has been baked into the HLS specification as a feature set. These back-to-back announcements made it clear that Apple had thrown its hat in the ring, and the industry rushed to support the new low-latency feature.

The new-and-improved HLS can deliver latency of 3 seconds or less. That’s very fast and suitable for most low-latency use cases. But if you truly need the fastest option and your use case requires real-time streaming, WebRTC still reigns supreme.
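Where do HLS latency figures like these come from? Part of the answer is how far behind the live edge a player is told to start. The sketch below encodes the hold-back minimums as we read them in the HLS specification (the RFC 8216bis draft): `HOLD-BACK` must be at least three times the target segment duration, and the LL-HLS `PART-HOLD-BACK` must be at least twice the partial-segment target, with three times recommended. The function names are illustrative, and the results are lower bounds on player buffering only; encoding, packaging, and network delays come on top.

```python
def min_hold_back_s(target_duration_s: float) -> float:
    """Standard HLS: HOLD-BACK must be at least 3x the target segment duration."""
    return 3 * target_duration_s


def min_part_hold_back_s(part_target_s: float, recommended: bool = True) -> float:
    """LL-HLS: PART-HOLD-BACK must be at least 2x the part target; 3x is recommended."""
    multiplier = 3 if recommended else 2
    return multiplier * part_target_s
```

With classic 6-second segments, `min_hold_back_s(6)` returns 18, so a player starts at least 18 seconds behind live before any other delay is counted. With 1-second parts, `min_part_hold_back_s(1.0)` returns 3.0, which lines up with the roughly 3-second figure above.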

WebRTC is the fastest protocol on the market, clocking in at sub-500-millisecond delivery. Whether it’s to ensure that a conversation flows naturally or that doctors maintain control of remote surgical devices, this speed is often critical.

WebRTC was built with bidirectional, real-time communication in mind. Unlike HLS, which runs over HTTP on top of TCP, WebRTC delivers media over UDP. UDP doesn’t require TCP’s connection handshake or guarantee in-order delivery, so a lost packet never holds up the ones behind it. As a result, WebRTC is speedier but also more susceptible to network fluctuations.
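The trade-off between speed and susceptibility is easiest to see with packet loss. Below is a toy model, not a description of any real TCP or WebRTC stack: packets leave at a fixed interval with a fixed one-way delay, and `rto_ms` is an assumed retransmission delay. Reliable, in-order delivery (TCP-like) makes every later packet wait behind a lost one; unreliable delivery (UDP-like) releases each packet as it arrives and simply skips the lost one. Real WebRTC stacks layer mechanisms such as retransmission requests, forward error correction, and jitter buffers on top of UDP, so treat this as an illustration of the principle only.

```python
from typing import List, Optional, Set


def tcp_like_delivery(n: int, interval_ms: float, delay_ms: float,
                      lost: Set[int], rto_ms: float) -> List[float]:
    """Reliable, in-order delivery: a lost packet is resent after rto_ms,
    and every later packet is held back until it arrives (head-of-line blocking)."""
    released: List[float] = []
    last_release = 0.0
    for i in range(n):
        arrival = i * interval_ms + delay_ms
        if i in lost:
            arrival += rto_ms  # only the retransmitted copy gets through
        # In-order delivery: a packet can't reach the app before its predecessors.
        last_release = max(last_release, arrival)
        released.append(last_release)
    return released


def udp_like_delivery(n: int, interval_ms: float, delay_ms: float,
                      lost: Set[int]) -> List[Optional[float]]:
    """Unreliable delivery: packets are handed over on arrival; lost ones never show up."""
    return [None if i in lost else i * interval_ms + delay_ms for i in range(n)]
```

With five packets sent 20 ms apart, a 30 ms one-way delay, packet 1 lost, and a 200 ms retransmission delay, the TCP-like model releases everything after the loss at 250 ms, while the UDP-like model delivers the rest on time and leaves a gap. The viewer sees a glitch instead of a stall.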

Flexibility and Compatibility

When it comes to which protocol has better flexibility, it all depends on what your workflow requires.

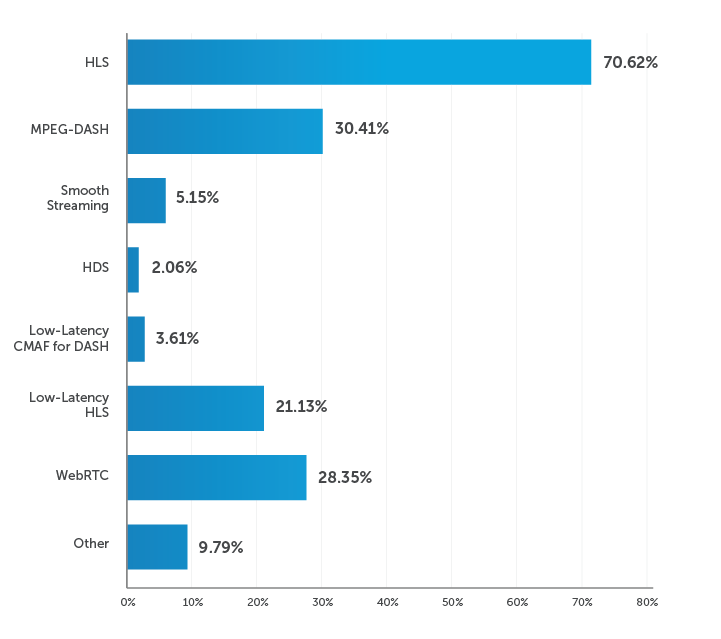

As the most widely used protocol for media streaming, HLS is supported by a wide range of devices and browsers, and it works with standard content delivery networks (CDNs). For these reasons, more than 70% of participants in our 2021 Video Streaming Latency Report indicated that they use HLS for delivery.

Chart: “Which streaming formats are you currently using for delivery?”

Another perk of HLS is its support for closed captions and subtitles, metadata, Digital Rights Management (DRM), and ad insertion. That said, LL-HLS implementations aren’t quite there yet. These are capabilities that people will need, but it’ll take time for the industry to get them working within the LL-HLS ecosystem. However, no one can ignore Apple’s ecosystem for long, and Apple’s clout will push development work for LL-HLS. Our guess is that capabilities like these won’t lag too far behind.

Browser support for LL-HLS is par for the course and shouldn’t differ from what you already do with HLS. Player compatibility is another issue, though. Outside Apple’s native player, LL-HLS support in native players such as Android’s ExoPlayer can’t be taken for granted. Companies such as THEO and JW Player, however, have been developing their own proprietary players to help with playback on all major devices, platforms, and browsers. Moreover, any player that isn’t optimized for LL-HLS can fall back to standard (higher-latency) HLS behavior.
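That fallback works because HLS clients skip tags they don’t recognize. The sketch below uses a trimmed, illustrative LL-HLS media playlist (the URIs and durations are made up) and a deliberately naive parser: a legacy player only follows full segment URIs, while an LL-HLS-aware player also picks up the partial segments advertised with `EXT-X-PART`. Real players handle many more tags, quoted commas in attribute lists, and playlist reloads.

```python
from typing import List

# Illustrative LL-HLS media playlist (trimmed; URIs and durations are made up).
SAMPLE_PLAYLIST = """#EXTM3U
#EXT-X-TARGETDURATION:4
#EXT-X-SERVER-CONTROL:CAN-BLOCK-RELOAD=YES,PART-HOLD-BACK=1.5
#EXT-X-PART-INF:PART-TARGET=0.5
#EXT-X-MEDIA-SEQUENCE:266
#EXTINF:4.0,
fileSequence266.mp4
#EXT-X-PART:DURATION=0.5,URI="filePart267.0.mp4",INDEPENDENT=YES
#EXT-X-PART:DURATION=0.5,URI="filePart267.1.mp4"
#EXT-X-PRELOAD-HINT:TYPE=PART,URI="filePart267.2.mp4"
"""


def playable_uris(playlist: str, low_latency: bool) -> List[str]:
    """Return the media URIs a (very naive) player would fetch from a media playlist."""
    uris: List[str] = []
    for raw in playlist.splitlines():
        line = raw.strip()
        if not line:
            continue
        if not line.startswith("#"):
            uris.append(line)  # a full segment URI, understood by every HLS player
        elif low_latency and line.startswith("#EXT-X-PART:"):
            # Naive attribute parsing: assumes no commas inside quoted values.
            attributes = line.split(":", 1)[1]
            for attribute in attributes.split(","):
                key, _, value = attribute.partition("=")
                if key == "URI":
                    uris.append(value.strip('"'))
        # Every other tag, known or unknown, is skipped, which is what lets
        # legacy players fall back to plain HLS behavior.
    return uris
```

With `low_latency=False`, it returns only `fileSequence266.mp4`; with `low_latency=True`, it also returns the two `filePart267.*` parts, which is how an LL-HLS player gets at new media before the next full segment is finished.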

On the other hand, a major strength of WebRTC is that no additional plug-ins or software are required for it to function within the browser. Today, all major desktop browsers support it, although some browsers are still working through a few bugs.

The truth is, there’s no clear winner between these two protocols in terms of flexibility and compatibility right now. LL-HLS still needs a lot of work, and WebRTC feels as though it’s a perpetual work in progress. Until LL-HLS can catch up to HLS, you’ll need to look very closely at what you’re trying to build and what level of flexibility you require in order to make the right choice.

Quality

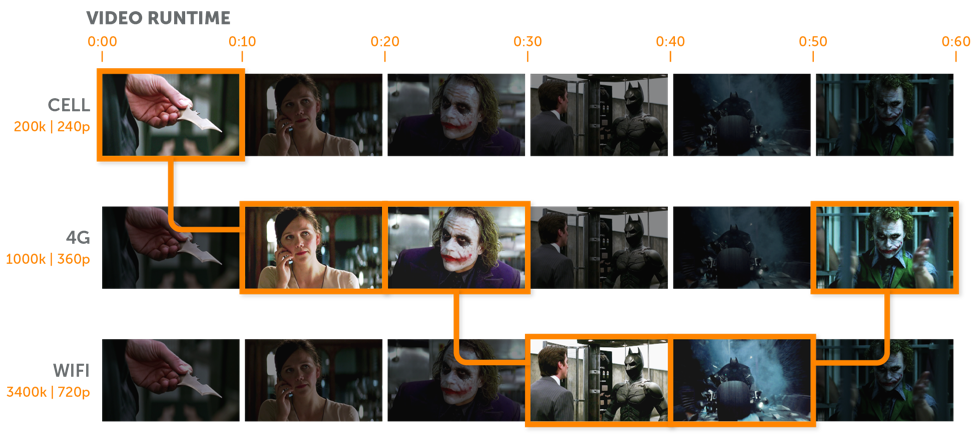

For most video workflows, high-quality delivery is a product of adaptive bitrate (ABR) capabilities. It’s the secret sauce of professional streaming: ABR gives each viewer the best video quality and experience that their connection, software, and device can support.

In terms of quality, LL-HLS takes the cake. HLS is the standard in ABR video, and that includes LL-HLS. Multiple renditions allow for playback at different bandwidths, and the player requests the highest-quality rendition that each viewer’s device and connection speed can sustain. A delivery method that automatically adjusts the video stream between multiple bitrates and/or resolutions is far better than one that operates at a single bitrate.
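To make rendition switching concrete, here is a minimal throughput-based selection rule. The rendition ladder, the 0.8 safety factor, and the function name are assumptions for illustration; production players use their own, more elaborate heuristics (often factoring in buffer level as well). `bandwidth_bps` plays the role of the `BANDWIDTH` attribute that each variant advertises in an HLS multivariant playlist.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Rendition:
    name: str
    bandwidth_bps: int  # analogous to a variant's BANDWIDTH attribute


# Hypothetical bitrate ladder, highest to lowest.
RENDITIONS = [
    Rendition("1080p", 6_000_000),
    Rendition("720p", 3_000_000),
    Rendition("480p", 1_500_000),
    Rendition("240p", 400_000),
]


def pick_rendition(renditions: Sequence[Rendition], throughput_bps: float,
                   safety: float = 0.8) -> Rendition:
    """Choose the highest-bitrate rendition that fits within a safety margin
    of the measured throughput; fall back to the lowest one if none fits."""
    budget = throughput_bps * safety
    fitting = [r for r in renditions if r.bandwidth_bps <= budget]
    if not fitting:
        return min(renditions, key=lambda r: r.bandwidth_bps)
    return max(fitting, key=lambda r: r.bandwidth_bps)
```

At a measured 5 Mbps, the 4 Mbps budget selects 720p; if throughput drops to 2 Mbps, the same rule steps down to 480p on the next selection.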

WebRTC, on the other hand, wasn’t built with quality in mind. WebRTC’s number one priority has always been real-time latency for peer-to-peer browser connections. As a result, quality takes a back seat.

WebRTC does offer simulcasting on the contribution end, but this doesn’t translate to different bitrate options for viewers. Instead, the lowest common denominator is often the safest bet for last-mile delivery. Why? A low-bitrate stream will play back safely on the widest range of devices and network conditions.
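The cost of a single bitrate is easy to quantify. This sketch compares one stream shared by every viewer with per-viewer ABR selection over the same hypothetical bitrate ladder. The ladder, viewer throughputs, and 0.8 safety factor are illustrative assumptions, and the code models the trade-off in general rather than WebRTC’s own congestion control.

```python
from typing import List, Sequence

# Hypothetical bitrate ladder in bits per second.
LADDER_BPS = [6_000_000, 3_000_000, 1_500_000, 400_000]


def best_fit(ladder: Sequence[int], throughput_bps: float, safety: float = 0.8) -> int:
    """Highest rung within a safety margin of throughput, else the lowest rung."""
    budget = throughput_bps * safety
    fitting = [b for b in ladder if b <= budget]
    return max(fitting) if fitting else min(ladder)


def single_bitrate(ladder: Sequence[int], viewers_bps: Sequence[float]) -> int:
    """One stream for everyone: it has to fit the slowest viewer."""
    return best_fit(ladder, min(viewers_bps))


def per_viewer(ladder: Sequence[int], viewers_bps: Sequence[float]) -> List[int]:
    """ABR-style: each viewer gets the best rung their own connection supports."""
    return [best_fit(ladder, t) for t in viewers_bps]
```

For viewers measured at 8, 4, and 1 Mbps, `single_bitrate` returns 400 kbps for everyone, while `per_viewer` returns 6 Mbps, 3 Mbps, and 400 kbps, so the fastest viewer gets 15 times the bitrate once ABR is in play.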

Security

Long before the word Zoombombing made it into Merriam-Webster, privacy was a big concern in the industry. Content protection throughout the workflow can take many forms, including encryption of incoming and outgoing streams, token authentication, and DRM for premium content delivery.

Because the LL-HLS spec has been merged into the HLS spec, LL-HLS in theory supports everything that HLS does. But as mentioned above, it’ll take time for providers to catch up. Capabilities such as DRM, token authentication, and key rotation will all be possible, but they won’t be available until providers get them working within their ecosystems. LL-HLS isn’t completely unprotected, however. TLS 1.3 is recommended as part of the spec, and it encrypts data in transit so that intercepted traffic can’t be read or tampered with undetected.
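On the delivery side, requiring TLS 1.3 is usually a configuration setting. As one example, Python’s standard `ssl` module can build a context that refuses older protocol versions. The helper name and certificate paths are placeholders, and your web server or CDN will have its own equivalent setting.

```python
import ssl
from typing import Optional


def tls13_only_context(purpose: ssl.Purpose = ssl.Purpose.CLIENT_AUTH,
                       certfile: Optional[str] = None,
                       keyfile: Optional[str] = None) -> ssl.SSLContext:
    """Build an SSLContext that refuses anything older than TLS 1.3.

    Purpose.CLIENT_AUTH yields a server-side context; Purpose.SERVER_AUTH
    yields a client-side (player) context that verifies the server.
    """
    ctx = ssl.create_default_context(purpose)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    if certfile:
        # A server needs its certificate chain; these paths are placeholders.
        ctx.load_cert_chain(certfile, keyfile)
    return ctx
```

Passing `ssl.Purpose.SERVER_AUTH` gives a client-side context instead, which also checks the server’s certificate and hostname.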

WebRTC also secures content in transit. Encryption is mandatory in WebRTC (media is protected with DTLS-SRTP), so traffic between endpoints is always encrypted. Although WebRTC lacks compatibility with DRM providers, if you’re looking for basic security measures, the encryption WebRTC offers will be enough. What WebRTC leaves out of scope is authentication, authorization, and identity management. This doesn’t mean you can’t add them (you can, and you should); it just means they’re not offered out of the box.
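Token authentication is one of those add-ons, and it applies to LL-HLS delivery as well. A common pattern is an expiring token signed with a shared secret: whoever grants access computes an HMAC over the resource path and an expiry time, and the server (or edge) recomputes it before serving a playlist or admitting a WebRTC session. This is a generic sketch, not Wowza’s, Apple’s, or any CDN’s token format; the `path:expires` message layout and the function names are hypothetical.

```python
import hashlib
import hmac
import time
from typing import Optional, Tuple


def _sign(path: str, expires: int, secret: bytes) -> str:
    # Hypothetical message layout: "<path>:<expiry unix time>".
    message = f"{path}:{expires}".encode("utf-8")
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def issue_token(path: str, ttl_s: int, secret: bytes,
                now: Optional[int] = None) -> Tuple[int, str]:
    """Return (expires, token) granting access to `path` for ttl_s seconds."""
    now = int(time.time()) if now is None else now
    expires = now + ttl_s
    return expires, _sign(path, expires, secret)


def verify_token(path: str, expires: int, token: str, secret: bytes,
                 now: Optional[int] = None) -> bool:
    """Reject expired tokens, then compare signatures with a timing-safe check."""
    now = int(time.time()) if now is None else now
    if now > expires:
        return False
    return hmac.compare_digest(_sign(path, expires, secret), token)
```

Because the signature covers the path, a token minted for one stream won’t open another, and `hmac.compare_digest` is designed to resist timing attacks during the comparison.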

Scalability

Scaling WebRTC

If your solution requires peer-to-peer, real-time streaming, WebRTC is most likely the protocol you’ll want to use. But it used to be a no-go when broadcasting to anything north of ~50 viewers. Luckily, Wowza’s video platform offers three options for overcoming these constraints.

1. Real-Time Streaming at Scale for Wowza Streaming Cloud

Our newest Real-Time Streaming at Scale feature for Wowza Streaming Cloud deploys WebRTC across a custom CDN to provide near-limitless scale. Content distributors can stream to a million viewers in less than half a second, thus combining real-time delivery with large-scale broadcasting.

2. WebRTC Powered by Wowza Streaming Engine

By connecting all participants to a live streaming server like Wowza Streaming Engine, content distributors benefit from real-time streaming at a larger scale, while optimizing bandwidth by minimizing the number of connections each client must establish and maintain. Additional infrastructure would be needed to scale beyond several hundred viewers, in which case Real-Time Streaming at Scale with Wowza Streaming Cloud would be a better route.
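A back-of-the-envelope comparison shows why a server in the middle helps. The sketch assumes a full peer-to-peer mesh (every participant connected to every other) versus a star topology through a media server, with a single stream at a fixed bitrate. Peer-to-peer, the broadcaster’s upload grows with every viewer; through a server, it stays flat because the server fans out the copies. The function names and figures are illustrative.

```python
def mesh_connections_per_peer(participants: int) -> int:
    """Full mesh: each participant connects directly to every other one."""
    return participants - 1


def mesh_total_connections(participants: int) -> int:
    """Number of distinct peer-to-peer links in a full mesh."""
    return participants * (participants - 1) // 2


def broadcaster_upload_bps(viewers: int, stream_bps: int, via_server: bool) -> int:
    """Peer-to-peer, the broadcaster sends one copy per viewer;
    through a media server, it sends one copy and the server fans it out."""
    return stream_bps if via_server else viewers * stream_bps
```

With 50 viewers of a 2 Mbps stream, a peer-to-peer broadcaster must upload 100 Mbps, and a 50-participant mesh needs 1,225 links in total. Through a server, the broadcaster uploads a single 2 Mbps stream and each client keeps one connection.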

3. Converting WebRTC to HLS or DASH

Finally, a live streaming server or cloud-based service can be used to transcode the WebRTC stream into a protocol like HLS for distribution to thousands. That way, content distributors can combine simple, browser-based publishing with large-scale broadcasting — albeit at a higher latency. When easy content acquisition (and not real-time delivery) is the key reason for incorporating WebRTC into your streaming workflow, then this solution is the way to go.

Scaling Low-Latency HLS

Before integration with CDNs, scaling LL-HLS was merely aspirational and extremely limited. Vendors have spent the past couple of years developing for the ever-evolving spec, and they’ve recently begun announcing their support. Technology partners such as Fastly allow for global distribution and reduced latency, because more content is cached closer to the viewer. Although the delay is longer than with WebRTC, CDNs make it possible to stream LL-HLS to thousands in less than 3 seconds. That said, development is still ongoing, and large-scale implementations of LL-HLS remain few and far between.
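One reason CDN support matters is LL-HLS’s blocking playlist reload. When a playlist advertises `CAN-BLOCK-RELOAD=YES`, a player can add the `_HLS_msn` (media sequence number) and optional `_HLS_part` delivery directives to its playlist request, and the server holds the response until that segment or part exists. Because the directives live in the URL, an edge cache can treat each such request as its own object. The helper below only builds the request URL; the host name and numbers are placeholders.

```python
from typing import List, Optional, Tuple
from urllib.parse import urlencode, urlsplit, urlunsplit


def blocking_reload_url(playlist_url: str, msn: int, part: Optional[int] = None) -> str:
    """Append LL-HLS delivery directives asking the server to hold the response
    until media sequence `msn` (and, optionally, partial segment `part`) exists."""
    scheme, netloc, path, query, fragment = urlsplit(playlist_url)
    directives: List[Tuple[str, int]] = [("_HLS_msn", msn)]
    if part is not None:
        # _HLS_part is only meaningful together with _HLS_msn.
        directives.append(("_HLS_part", part))
    extra = urlencode(directives)
    query = f"{query}&{extra}" if query else extra
    return urlunsplit((scheme, netloc, path, query, fragment))
```

For example, asking for part 2 of media sequence 267 on `https://cdn.example.com/live/stream.m3u8` yields `https://cdn.example.com/live/stream.m3u8?_HLS_msn=267&_HLS_part=2`.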

Cost

WebRTC is an open-source protocol, and it’s free. Great! But while scaling WebRTC is totally doable, even at sub-500-millisecond latency, you’ll have to pay the price for a custom WebRTC CDN.

LL-HLS is more cost-effective because it uses affordable HTTP infrastructure and existing TCP-based network technology. That said, it’s a less mature technology that comes with higher latency. And when sub-second streaming is crucial, WebRTC remains your only option.
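A quick way to frame the cost question is delivered volume times unit price. For a given stream, the volume depends only on audience size, bitrate, and watch time, so it’s the same whether the stream travels over WebRTC or LL-HLS; what differs is the price of the infrastructure carrying it. The sketch uses decimal gigabytes, and `price_per_gb` is a placeholder you’d fill in from your own provider’s rates, not a real quote.

```python
def delivered_gb(viewers: int, bitrate_bps: float, hours: float) -> float:
    """Total data delivered, in decimal gigabytes (1 GB = 10**9 bytes)."""
    bits = viewers * bitrate_bps * hours * 3600
    return bits / 8 / 1e9


def delivery_cost(viewers: int, bitrate_bps: float, hours: float,
                  price_per_gb: float) -> float:
    """Volume multiplied by a per-GB price; price_per_gb is an input, not a quote."""
    return delivered_gb(viewers, bitrate_bps, hours) * price_per_gb
```

For instance, 1,000 viewers watching a 3 Mbps stream for one hour pull about 1,350 GB, whichever protocol carries it.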

Conclusion

LL-HLS and WebRTC have come a long way in the last couple of years. Even though they’re both cutting-edge technologies driving the industry forward, each has its pros and cons. Neither option is perfect for everything, but one of the two can be the perfect solution for you. Ultimately, the best protocol will depend on the specifics of your project, the devices you plan to distribute to, and the size of your audience. Make sure to keep these things in mind when building your low-latency solution.